Who talked about us

The Twelve Labs Video Understanding Platform offers semantic search functionalities that analyze a video's context, concepts, relationships, and semantics, yielding more relevant results than standard YouTube or TikTok searches. This type of search interprets the meaning of your search queries, focusing on the relationships between different concepts and entities rather than just finding the video clips that match your search terms.

Challenges in identifying suitable influencers

Identifying the ideal YouTube or TikTok influencer for your brand is essential. The most effective partnerships form organically with influencers already discussing your products or similar brands. However, finding influencers who discuss related products can be challenging, especially since YouTube and TikTok searches may overlook content that does not explicitly mention your brand name or other specific words.

Overview

This guide is designed for developers with intermediate knowledge of Node.js and React. After completing this guide, you will know how to integrate the semantic search functionality of the Twelve Labs Video Understanding Platform into your web application.

The application consists of a Node.js backend and a React frontend:

- The backend acts as an intermediary between the Twelve Labs Video Understanding Platform, the frontend, and YouTube. It uses the Express web framework.

- The frontend is structured as a typical React application and uses React Query, a library for fetching, caching, and updating data.
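Because the backend sits between the frontend and the platform, the frontend sends its requests to the Express server rather than to the Twelve Labs API. The following is a minimal sketch of such a call to the backend's `/search` route. The function name `searchViaBackend` and the injected `fetchImpl` parameter are hypothetical, and the repository may structure this differently (for example, inside a React Query hook).

```javascript
// Hypothetical sketch: the frontend posts a search to the Express backend,
// which forwards it to the Twelve Labs API.
// `serverUrl` and `port` correspond to REACT_APP_SERVER_URL and REACT_APP_PORT_NUMBER.
async function searchViaBackend(fetchImpl, serverUrl, port, indexId, query) {
  const response = await fetchImpl(`${serverUrl}:${port}/search`, {
    method: "POST",
    headers: { "Content-Type": "application/json" },
    // The backend's /search route reads indexId and query from the request body.
    body: JSON.stringify({ indexId, query }),
  });
  if (!response.ok) {
    throw new Error(`Backend returned HTTP ${response.status}`);
  }
  return response.json();
}

// Example (browser or Node.js 18+, using the global fetch):
// searchViaBackend(fetch, "http://localhost", 4000, "<INDEX_ID>", "energy drinks");
```

Injecting `fetchImpl` keeps the function easy to test without a running server.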

Prerequisites

- You're familiar with the concepts described on the Platform overview page.

- Before you begin, sign up for a free account, or if you already have one, sign in.

- You have a basic understanding of React (components, state, and props) and Node.js.

- You're familiar with using command line interfaces and GitHub.

- Node.js is installed on your system.

- Nodemon is installed on your system.

Run the application

- Clone the Who Talked About Us GitHub repository to your computer.

- Retrieve your API key by going to the API Key page and selecting the Copy icon at the right of your API key.

- Retrieve the base URL and the latest version of the API. Using these values, construct the URL of the API. For instructions, see the Call an endpoint page.

-

Create a file named

.envwith the key-value pairs shown below in the root directory of your project, replacing the placeholders surrounded by<>with your values:REACT_APP_API_URL=<BASE_URL>/<VERSION> REACT_APP_API_KEY=<YOUR_API_KEY> REACT_APP_SERVER_URL=<SERVER_URL> REACT_APP_PORT_NUMBER=<SERVER_PORT>Example:

REACT_APP_API_URL=https://api.twelvelabs.io/v1.2 REACT_APP_API_KEY=tlk_1KM4EKT25JWQV722BNZZ30GAD1JA REACT_APP_SERVER_URL=http://localhost REACT_APP_PORT_NUMBER=4000 -

To install the dependencies, open a terminal window, navigate to the root directory of your project, and run the following command:

npm install -

To start the backend, enter the following command:

nodemon server.jsYou should see an output similar to this:

[nodemon] 3.0.1 [nodemon] to restart at any time, enter `rs` [nodemon] watching path(s): *.* [nodemon] watching extensions: js,mjs,cjs,json [nodemon] starting `node server.js` body-parser deprecated undefined extended: provide extended option server.js:122:14 Server Running. Listening on port 4001 -

To start the frontend, open a new terminal window, navigate to the root directory of your project, and enter the following command:

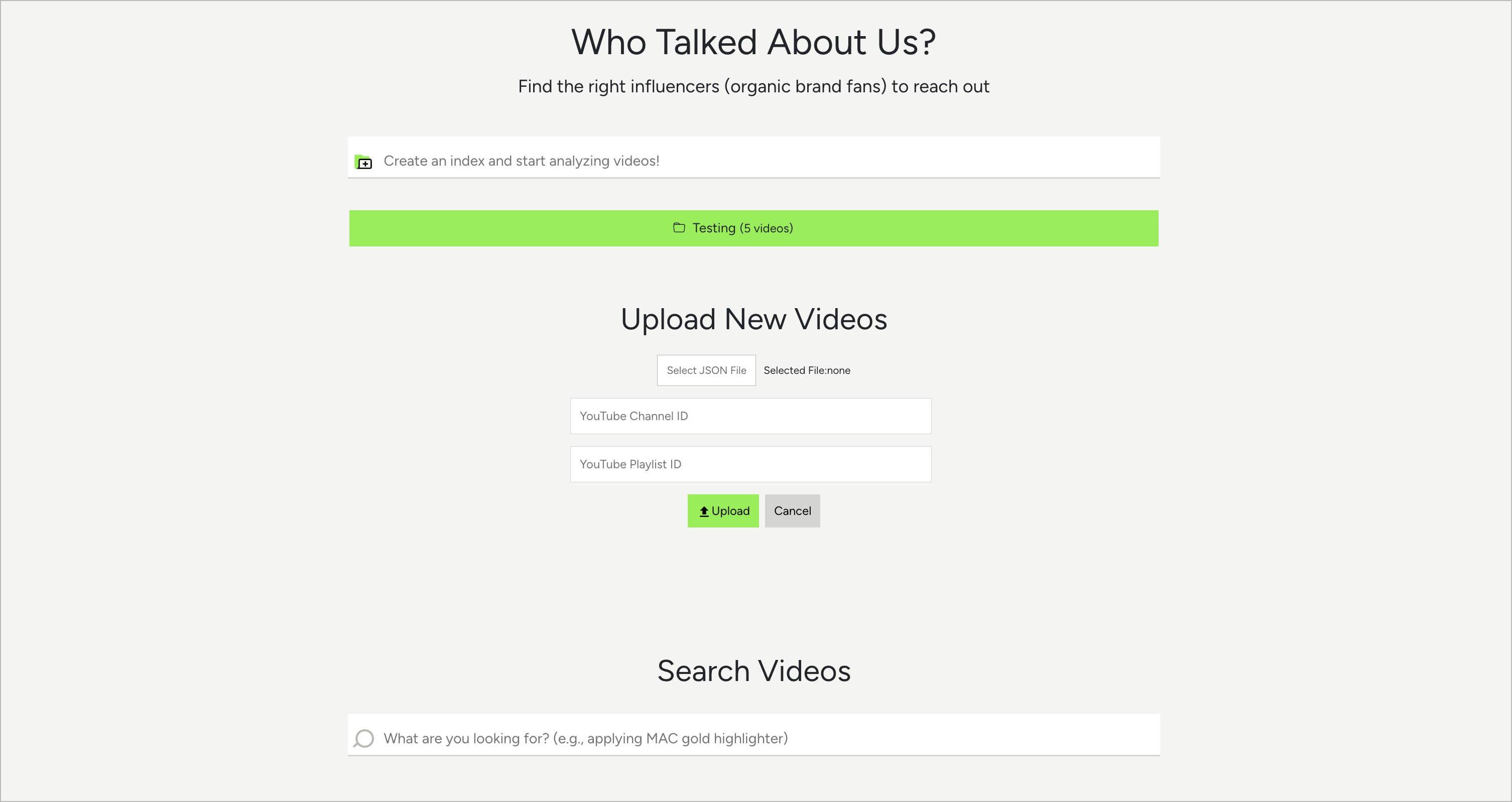

npm startThis will open a new browser window displaying the application:

-

Typically, using the application is a three-step process:

- Create an index.

- Upload videos.

- Perform search requests.
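The `.env` step in the list above can be summarized in code. The following sketch assumes the four variables are available on an object such as `process.env`, checks that each one is set, and composes the API URL and the backend base URL from them. The `loadConfig` helper is hypothetical and is not part of the repository.

```javascript
// Hypothetical helper: validate the .env values described above and derive URLs.
const REQUIRED_KEYS = [
  "REACT_APP_API_URL",
  "REACT_APP_API_KEY",
  "REACT_APP_SERVER_URL",
  "REACT_APP_PORT_NUMBER",
];

function loadConfig(env) {
  // Report every missing key at once so they can all be fixed in one pass.
  const missing = REQUIRED_KEYS.filter((key) => !env[key]);
  if (missing.length > 0) {
    throw new Error(`Missing environment variables: ${missing.join(", ")}`);
  }
  const port = Number(env.REACT_APP_PORT_NUMBER);
  if (!Number.isInteger(port) || port <= 0 || port > 65535) {
    throw new Error(`Invalid port: ${env.REACT_APP_PORT_NUMBER}`);
  }
  return {
    // <BASE_URL>/<VERSION> with any trailing slash removed.
    apiUrl: env.REACT_APP_API_URL.replace(/\/+$/, ""),
    apiKey: env.REACT_APP_API_KEY,
    // Base URL the frontend uses to reach the Express backend.
    serverBaseUrl: `${env.REACT_APP_SERVER_URL.replace(/\/+$/, "")}:${port}`,
  };
}

// Usage: const config = loadConfig(process.env);
```

With the example values, `serverBaseUrl` would be `http://localhost:4000`.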

Integration with Twelve Labs

This section provides a concise overview of integrating the "Who Talked About Us" application with the Twelve Labs Video Understanding Platform for semantic searches.

Create an index

To create an index, the application invokes the POST method of the /indexes endpoint with the following parameters and values:

| Parameter and value | Purpose |
|---|---|
| `engine_id` = `marengo2.5` | Specifies the video understanding engine that will be enabled for this index. |
| `index_options` = `["visual", "conversation", "text_in_video", "logo"]` | Specifies the types of information within the video that the video understanding engine will process. |
| `index_name` | Indicates the name of the new index. |

Below is the code for reference:

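```javascript
// Excerpt from server.js. Assumes `app` is the Express app, `TWELVE_LABS_API`
// is an HTTP client (for example, an axios instance) configured with the API URL,
// and `TWELVE_LABS_API_KEY` holds your API key.
app.post("/indexes", async (request, response, next) => {
  const headers = {
    "Content-Type": "application/json",
    "x-api-key": TWELVE_LABS_API_KEY,
  };
  // Request body for the Create an index endpoint.
  const data = {
    engine_id: "marengo2.5",
    index_options: ["visual", "conversation", "text_in_video", "logo"],
    index_name: request.body.indexName,
  };
  try {
    const apiResponse = await TWELVE_LABS_API.post("/indexes", data, {
      headers,
    });
    response.json(apiResponse.data);
  } catch (error) {
    // Returns the error to the frontend in the response body.
    response.json({ error });
  }
});
```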

For a description of each field in the request and response, see the API Reference > Create an index page.

Upload videos

To upload videos, the application invokes the POST method of the /tasks endpoint with the following parameters and values:

| Parameter and value | Purpose |
|---|---|
| `index_id` = `indexId` | Specifies the index to which the video is being uploaded. |
| `video_file` = `fs.createReadStream(videoPath)` | Represents the video to be uploaded, passed as a stream of bytes. |
| `language` = `en` | Specifies that the language of the video is English. |

Below is the code for reference:

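```javascript
const fs = require("fs");

// Uploads the video at `videoPath` to the index identified by `indexId`.
// Assumes `TWELVE_LABS_API` and `TWELVE_LABS_API_KEY` are defined in server.js.
const indexVideo = async (videoPath, indexId) => {
  // Request configuration; the HTTP headers are nested under `headers`.
  const config = {
    headers: {
      accept: "application/json",
      "Content-Type": "multipart/form-data",
      "x-api-key": TWELVE_LABS_API_KEY,
    },
  };
  // Form fields for the Create a video indexing task endpoint.
  const params = {
    index_id: indexId,
    video_file: fs.createReadStream(videoPath),
    language: "en",
  };
  const response = await TWELVE_LABS_API.post("/tasks", params, config);
  return response.data;
};
```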

For a description of each field in the request and response, see the API Reference > Create a video indexing task page.
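Video indexing runs asynchronously, so a video is not searchable as soon as the `/tasks` request returns. The sketch below shows one way to wait for a task to finish by polling. It assumes a caller-supplied `getTask(taskId)` function that resolves to the task object (for example, by calling the GET method of `/tasks/{task_id}` through `TWELVE_LABS_API`), and it assumes the task reports its state in a `status` field whose terminal values are `ready` and `failed`. Verify these names against the API Reference before relying on them; the `waitForTask` name, interval, and timeout are illustrative.

```javascript
// Hypothetical polling helper. Assumes getTask(taskId) resolves to an object
// with a `status` string, where "ready" and "failed" are terminal states.
const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

async function waitForTask(getTask, taskId, { intervalMs = 5000, timeoutMs = 600000 } = {}) {
  const deadline = Date.now() + timeoutMs;
  while (Date.now() < deadline) {
    const task = await getTask(taskId);
    if (task.status === "ready") {
      return task;
    }
    if (task.status === "failed") {
      throw new Error(`Indexing task ${taskId} failed`);
    }
    // Any other status is treated as "still in progress": wait, then check again.
    await sleep(intervalMs);
  }
  throw new Error(`Timed out waiting for indexing task ${taskId}`);
}
```

Passing `getTask` in as a function keeps the polling logic independent of the HTTP client.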

Search

To perform search requests, the application invokes the POST method of the /search endpoint with the following parameters and values:

| Parameter and value | Purpose |
|---|---|
| `index_id` = `request.body.indexId` | Specifies the index to be searched. |
| `search_options` = `["visual", "conversation", "text_in_video", "logo"]` | Specifies the sources of information the platform uses when performing the search. |
| `query` = `request.body.query` | Represents the search query the user has provided. |
| `group_by` = `video` | Indicates that the results must be grouped by video. |
| `sort_option` = `clip_count` | Indicates that the videos in the response must be sorted by the number of clips that match the query. |
| `threshold` = `medium` | Specifies that the platform must return only the results for which the confidence that the result matches your query is medium or higher. |
| `page_limit` = `2` | Specifies that the platform must return two items on each page. |

Below is the code for reference:

```javascript
app.post("/search", async (request, response, next) => {
  const headers = {
    accept: "application/json",
    "Content-Type": "application/json",
    "x-api-key": TWELVE_LABS_API_KEY,
  };
  const data = {
    index_id: request.body.indexId,
    search_options: ["visual", "conversation", "text_in_video", "logo"],
    query: request.body.query,
    group_by: "video",
    sort_option: "clip_count",
    threshold: "medium",
    page_limit: 2,
  };
  try {
    const apiResponse = await TWELVE_LABS_API.post("/search", data, {
      headers,
    });
    response.json(apiResponse.data);
  } catch (error) {
    return next(error);
  }
});
```

Note:

The platform supports both semantic and exact searches. To indicate the type of search the platform must perform, use the `conversation_option` parameter. The default value is `semantic`. If the request omits this parameter, the platform performs a semantic search.
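As an illustration of the note above, the sketch below builds the same request body as the `/search` route and adds `conversation_option` only when the caller asks for something other than the default. The `buildSearchBody` name is hypothetical, and `exact_match` as the value for exact searches is an assumption to confirm on the API Reference > Make a search request page.

```javascript
// Hypothetical helper mirroring the body that the /search route sends.
function buildSearchBody(indexId, query, conversationOption) {
  const body = {
    index_id: indexId,
    search_options: ["visual", "conversation", "text_in_video", "logo"],
    query,
    group_by: "video",
    sort_option: "clip_count",
    threshold: "medium",
    page_limit: 2,
  };
  // Without conversation_option the platform performs a semantic search, so the
  // field is only added for non-default values ("exact_match" is assumed here).
  if (conversationOption && conversationOption !== "semantic") {
    body.conversation_option = conversationOption;
  }
  return body;
}

// buildSearchBody("<INDEX_ID>", "running shoes", "exact_match");
```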

For a description of each field in the request and response, see the API Reference > Make a search request page.
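Because the search request sets `group_by` to `video`, each item in a page of results represents one video together with its matching clips. The sketch below turns such a page into a per-video summary, the kind of view that helps spot which creators mention a product most often. It assumes each item in `data` has an `id` (the video ID) and a `clips` array whose entries carry `start` and `end` times in seconds; these field names are assumptions, so check them against the API reference. The `summarizeByVideo` name is hypothetical.

```javascript
// Hypothetical summary of one page of grouped search results.
// Assumed shape: { data: [{ id, clips: [{ start, end }, ...] }, ...] }
function summarizeByVideo(searchPage) {
  return (searchPage.data || []).map((video) => {
    const clips = video.clips || [];
    return {
      videoId: video.id,
      clipCount: clips.length,
      // Total matching time in seconds across all clips of the video.
      matchedSeconds: clips.reduce((sum, clip) => sum + (clip.end - clip.start), 0),
    };
  });
}
```

The function preserves the order in which the platform returned the videos.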

Pagination

To improve the performance and usability of the platform, the /search endpoint supports pagination. After the first page is retrieved, as shown above, the application retrieves the remaining pages by calling the GET method of the /search endpoint with the identifier of the page to be retrieved as a path parameter.

Below is the code for reference:

```javascript
/** Get search results of a specific page */
app.get("/search/:pageToken", async (request, response, next) => {
  const pageToken = request.params.pageToken;
  const headers = {
    "Content-Type": "application/json",
    "x-api-key": TWELVE_LABS_API_KEY,
  };
  try {
    const apiResponse = await TWELVE_LABS_API.get(`/search/${pageToken}`, {
      headers,
    });
    response.json(apiResponse.data);
  } catch (error) {
    return next(error);
  }
});
```

For more details on how pagination works, see the Pagination > Search results page.
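To collect all pages instead of one page at a time, a caller can keep requesting pages until no token remains. The sketch below assumes each page carries the token for the next page in `page_info.next_page_token` (absent on the last page) and takes two caller-supplied functions: `fetchFirstPage()`, which could call the backend's POST `/search` route, and `fetchPage(token)`, which could call GET `/search/:pageToken`. The helper name and the `maxPages` guard are hypothetical.

```javascript
// Hypothetical helper that follows page tokens until the last page.
async function collectAllPages(fetchFirstPage, fetchPage, maxPages = 50) {
  const pages = [];
  let page = await fetchFirstPage();
  pages.push(page);
  // Assumed location of the token for the next page.
  let token = page.page_info && page.page_info.next_page_token;
  while (token && pages.length < maxPages) {
    page = await fetchPage(token);
    pages.push(page);
    token = page.page_info && page.page_info.next_page_token;
  }
  // Flatten the per-page result arrays into a single list.
  return pages.flatMap((p) => p.data || []);
}
```

The `maxPages` cap stops the loop if a large result set would otherwise trigger many requests.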

Next steps

After you have completed this tutorial, you have several options:

- Try out the deployed application: Visit the live demo to interact with the application.

- Use the application as-is: Inspect the source code to understand the platform's features and start using the application immediately.

- Customize and enhance the application: This application is a foundation for various projects. Modify the code to meet your specific needs, from adjusting the user interface to adding new features or integrating more endpoints.

- Explore further: Try the applications built by the community or the partner integrations to get more insights into the Twelve Labs Video Understanding Platform's diverse capabilities and learn more about integrating the platform into your applications.
