Model context protocol

The TwelveLabs Model Context Protocol (MCP) server connects your AI assistant to video understanding capabilities. You can search videos, generate summaries, and extract insights using simple natural language prompts.

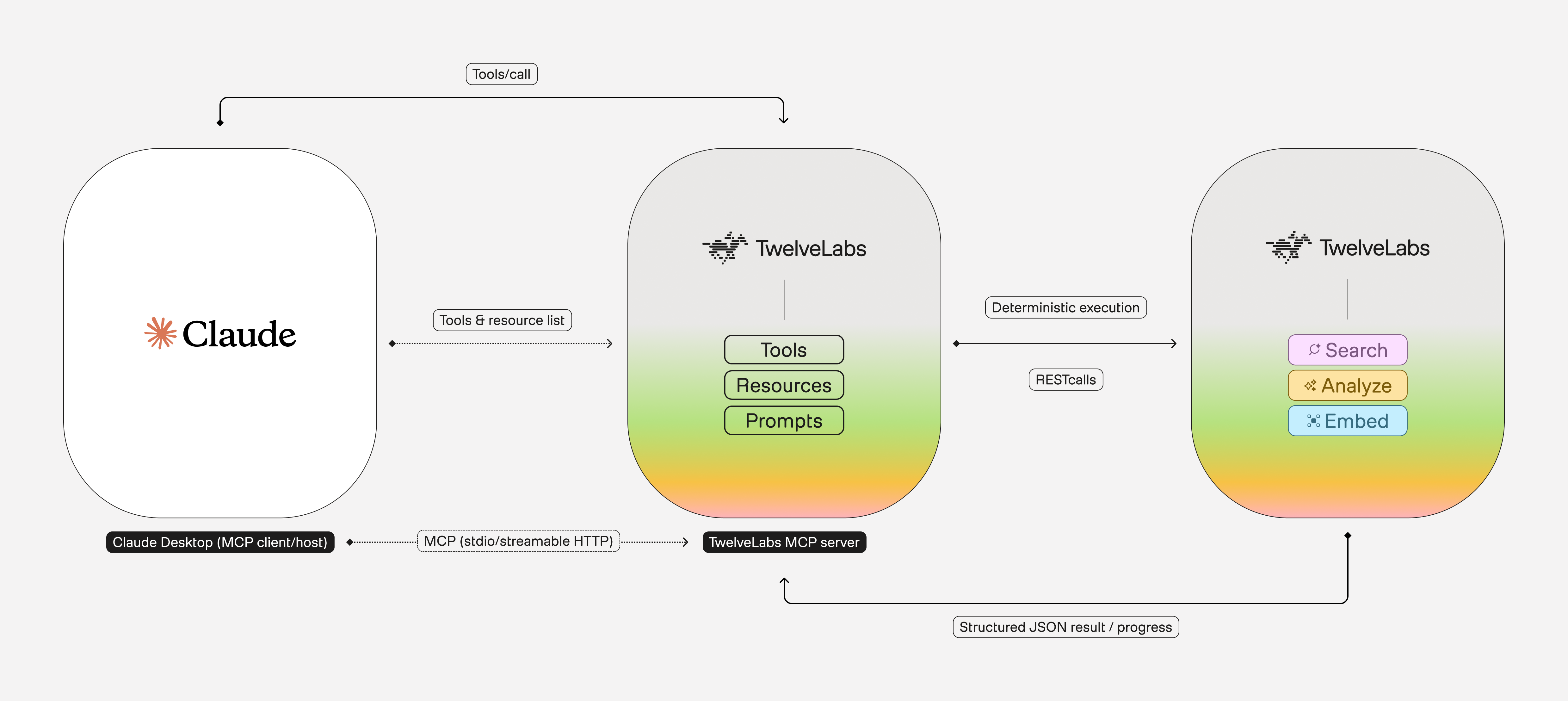

The following diagram illustrates the architecture of the TwelveLabs MCP server and how its components interact:

Key features:

- Index management: Create, list, and delete indexes to organize your video content efficiently.

- Video indexing: Upload and index videos to make them searchable and analyzable.

- Video search: Search through indexed videos using natural language queries to find specific moments and scenes.

- Video analysis: Generate titles, topics, hashtags, summaries, chapters, and highlights from your video content.

- Video embeddings: Create and retrieve video embeddings for advanced AI applications and custom workflows.

- Onboarding assistance: Get guided help to onboard faster on the TwelveLabs platform.

- Chainable workflows: Combine multiple video analysis tools in sequence to create complex Retrieval-Augmented Generation (RAG) workflows.

- Universal compatibility: Works with any MCP-compatible client, including Claude Desktop, Cursor, Windsurf, and Goose.
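The chainable-workflow idea can be sketched in a few lines of Python: the output of one tool becomes the input of the next, so retrieval feeds generation as in a RAG pipeline. This is a minimal sketch under stated assumptions. `search_videos` and `summarize_clip` are hypothetical stand-ins that return canned data. They are not the TwelveLabs MCP tools, and in practice the AI assistant decides which tools to call and in what order.

```python
from dataclasses import dataclass


@dataclass
class Clip:
    video_id: str
    start: float  # seconds
    end: float    # seconds


# Hypothetical stand-in for a video search tool; returns canned results.
def search_videos(index_id: str, query: str) -> list[Clip]:
    catalog = {
        "three-pointer": [Clip("game-1", 2710.0, 2725.0), Clip("game-1", 2840.0, 2852.0)],
    }
    return [clip for key, clips in catalog.items() if key in query for clip in clips]


# Hypothetical stand-in for a summarization tool.
def summarize_clip(clip: Clip) -> str:
    return f"{clip.video_id} {clip.start:.0f}s-{clip.end:.0f}s"


def chain(index_id: str, query: str) -> list[str]:
    # Step 1: retrieve matching moments. Step 2: generate text for each one.
    return [summarize_clip(clip) for clip in search_videos(index_id, query)]


print(chain("sports-index", "fourth-quarter three-pointers"))
```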

Use cases:

- Meeting analysis: Summarize meeting recordings and find specific discussion points using natural language queries.

- Sports highlights: Search through game footage to find specific plays like “fourth-quarter three-pointers.”

- Media production: Locate scenes where products appear in videos and generate promotional content summaries.

- Training content: Search webinars and presentations for specific topics like “final chart presentation” or key learning moments.

Key concepts

This section explains the key concepts and terminology used in this guide:

- Indexes: Organized collections that store videos, making them searchable and analyzable.

- Video indexing: The process of uploading videos and processing them so that they become searchable and analyzable.

- Semantic search: A method of finding video content based on meaning rather than relying solely on exact keywords.
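One common way to implement semantic search is to represent the query and each video segment as embedding vectors and rank segments by cosine similarity. The sketch below illustrates that technique with hand-picked 3-dimensional toy vectors. It does not use real model output and does not describe how TwelveLabs implements search. The query vector stands for "basketball shot", which shares no keywords with any segment description yet still ranks the three-pointer segment first.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    # Cosine similarity: dot product divided by the product of vector lengths.
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


# Toy 3-D "embeddings" chosen by hand for illustration; real models produce
# much higher-dimensional vectors.
segments = {
    "player sinks a long-range three-pointer": [0.9, 0.1, 0.0],
    "presenter shows a bar chart": [0.0, 0.2, 0.9],
    "crowd cheers in the arena": [0.6, 0.7, 0.1],
}


def semantic_search(query_vec: list[float], top_k: int = 2) -> list[str]:
    # Rank segments by similarity to the query, most similar first.
    ranked = sorted(segments, key=lambda s: cosine(query_vec, segments[s]), reverse=True)
    return ranked[:top_k]


# Toy vector standing in for the query "basketball shot".
print(semantic_search([0.95, 0.2, 0.0]))
```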

Prerequisites

- A TwelveLabs API key, which you need to use the platform.

- An MCP-compatible client, such as Claude Desktop, Cursor, Windsurf, or Goose.

Set up the TwelveLabs MCP server

Follow the instructions in the TwelveLabs MCP server installation guide to add the server to your MCP client. The guide provides step-by-step instructions for the most commonly used clients.

Verify the installation

Test that your client recognizes the TwelveLabs MCP server by asking your assistant to list the available TwelveLabs tools.

Confirm that your assistant displays a list that matches the tools described in the Tools section.
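When you ask the assistant to list tools, the MCP client sends a JSON-RPC 2.0 `tools/list` request to the server after the initialization handshake. The server replies with a `tools` array whose entries carry a `name`, `description`, and `inputSchema`. The sketch below builds that request and checks a response for expected tool names. The sample response and its tool names (`list_indexes`, `search_videos`, `summarize_video`) are hypothetical placeholders, not the server's actual tool list.

```python
import json


def build_tools_list_request(request_id: int = 1) -> str:
    # JSON-RPC 2.0 request that MCP clients use to discover a server's tools.
    return json.dumps({"jsonrpc": "2.0", "id": request_id, "method": "tools/list"})


def missing_tools(response_text: str, expected: set[str]) -> set[str]:
    # Return the expected tool names that the server did not advertise.
    response = json.loads(response_text)
    advertised = {tool["name"] for tool in response["result"]["tools"]}
    return expected - advertised


# Hypothetical response; real tool names and schemas come from the server.
sample_response = json.dumps({
    "jsonrpc": "2.0",
    "id": 1,
    "result": {"tools": [
        {"name": "list_indexes", "description": "List indexes", "inputSchema": {"type": "object"}},
        {"name": "search_videos", "description": "Search videos", "inputSchema": {"type": "object"}},
    ]},
})

print(build_tools_list_request())
print(missing_tools(sample_response, {"list_indexes", "search_videos", "summarize_video"}))
```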

If the TwelveLabs tools don’t appear when you ask to list them, try these steps:

- Review the configuration: Open your MCP client settings and confirm the server configuration matches the installation guide exactly.

- Check client logs: Look for error messages in your client’s logs or console output that might indicate connection issues.

- Join our Discord server: If you still can’t get the server working, reach out to us in our Discord community for help with your setup.
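Several MCP clients, such as Claude Desktop and Cursor, read server definitions from a JSON file with a top-level `mcpServers` object. Each entry typically has either a `command` (with `args` and `env`) for a locally launched server or a `url` for a remote one. The sketch below checks such a file for common mistakes. The server key `twelvelabs`, the `npx` command, the `<PACKAGE_FROM_GUIDE>` placeholder, and the environment variable name `TWELVELABS_API_KEY` are all hypothetical. Use the exact values from the installation guide.

```python
import json


def check_mcp_config(config_text: str, server_key: str, api_key_var: str) -> list[str]:
    """Return a list of problems found in an mcpServers-style config."""
    try:
        config = json.loads(config_text)
    except json.JSONDecodeError as exc:
        return [f"invalid JSON: {exc.msg} (line {exc.lineno})"]
    servers = config.get("mcpServers") if isinstance(config, dict) else None
    if not isinstance(servers, dict):
        return ["missing top-level 'mcpServers' object"]
    entry = servers.get(server_key)
    if entry is None:
        return [f"no server named '{server_key}'"]
    problems = []
    # Local servers are launched with a command; remote servers use a URL.
    if "command" not in entry and "url" not in entry:
        problems.append("server entry needs either 'command' or 'url'")
    # Hypothetical assumption: a locally launched server reads the key from env.
    if "command" in entry and not entry.get("env", {}).get(api_key_var):
        problems.append(f"'{api_key_var}' is not set in 'env'")
    return problems


# Hypothetical config; copy the real values from the installation guide.
sample_config = """{
  "mcpServers": {
    "twelvelabs": {
      "command": "npx",
      "args": ["-y", "<PACKAGE_FROM_GUIDE>"],
      "env": {"TWELVELABS_API_KEY": ""}
    }
  }
}"""
print(check_mcp_config(sample_config, "twelvelabs", "TWELVELABS_API_KEY"))
```

An empty list means the sketch found no structural problems. It cannot confirm that the command, package, or key values themselves are correct.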

Use the MCP server

You can now ask your AI assistant to perform video understanding tasks using natural language. Below are several examples of what you can do:

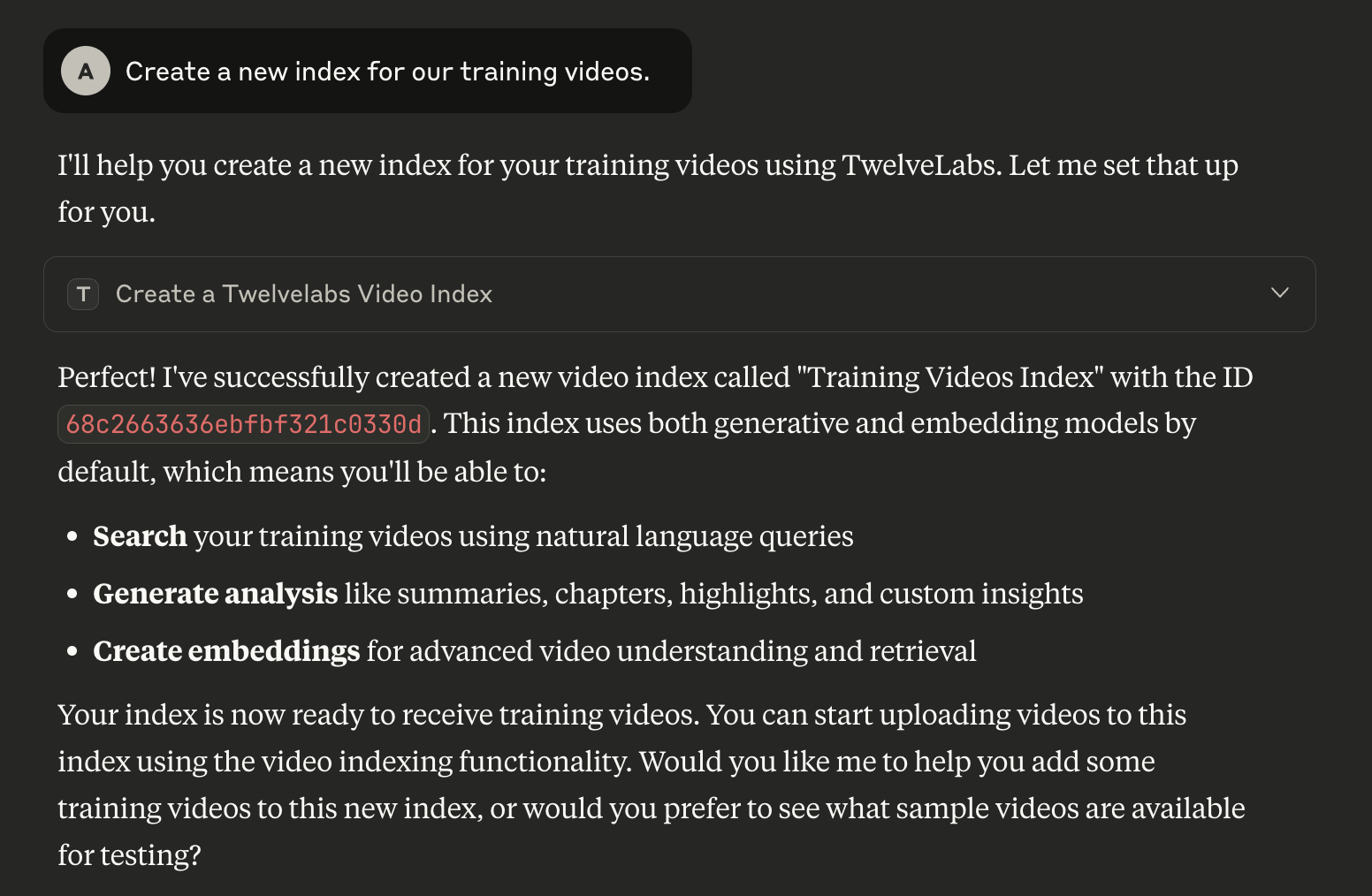

Manage indexes

- “Create a new index for our training materials”

- “List all available indexes”

- “Delete the <YOUR_INDEX_ID> index”

Upload videos

- “Index the meeting recording from this URL: <YOUR_URL>”

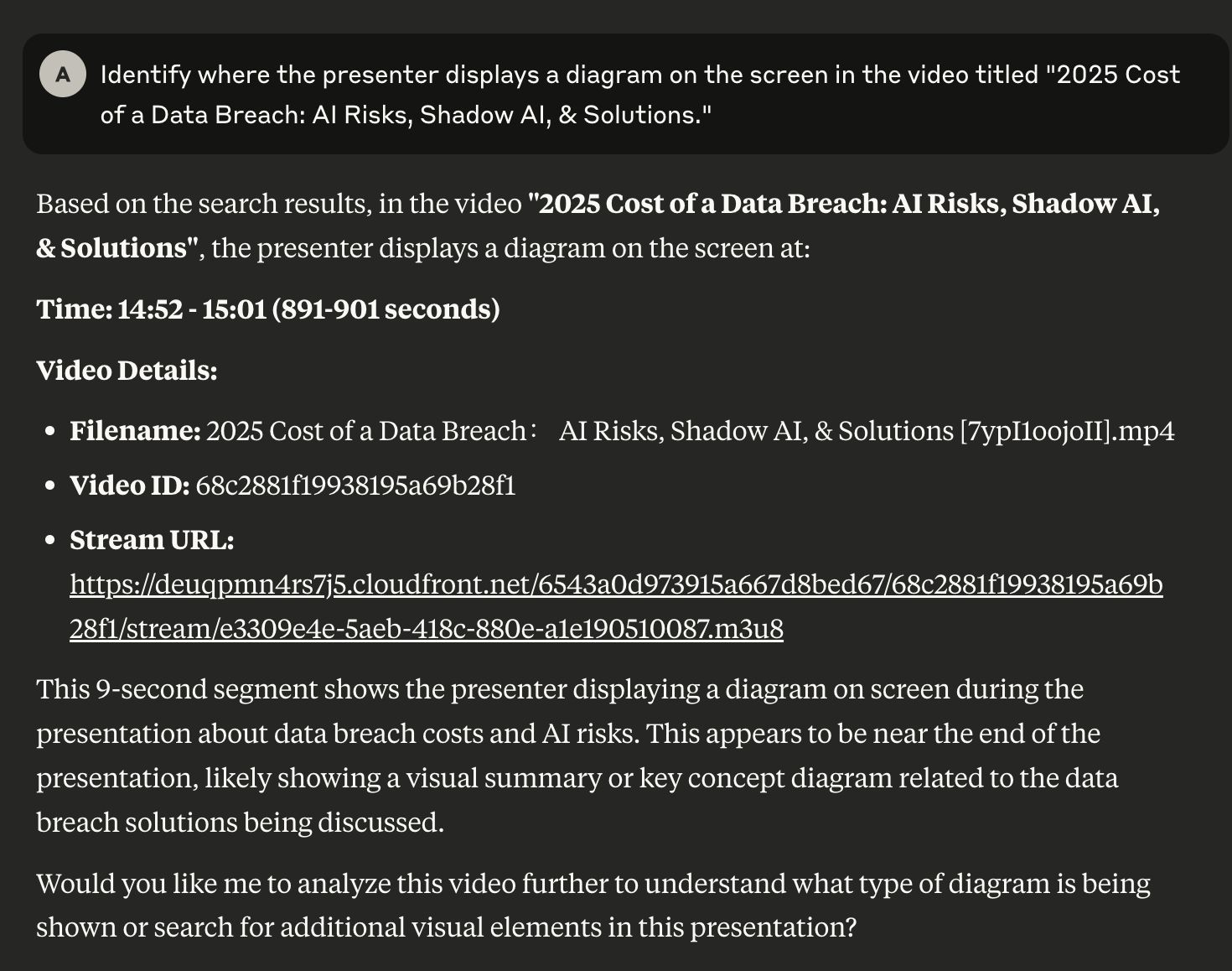

Search for specific moments

- “Identify where the presenter displays a diagram on the screen in the video titled ‘2025 Cost of a Data Breach: AI Risks, Shadow AI, & Solutions’”

- “Search for all scenes where this product appears”

- “Locate fourth-quarter three-pointers in the game footage”

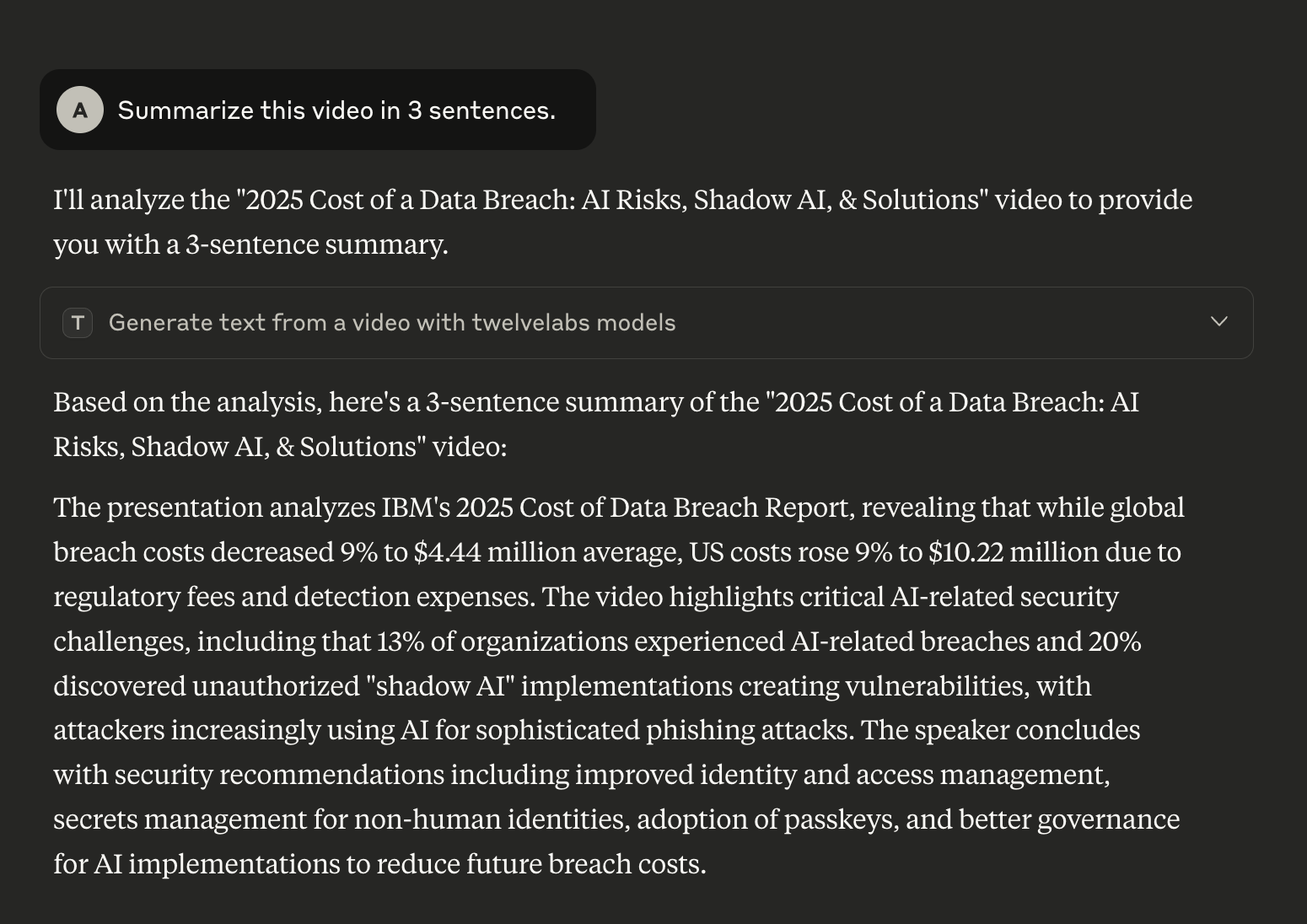
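When the assistant decides to act on a prompt such as “Locate fourth-quarter three-pointers in the game footage”, the MCP client sends a JSON-RPC 2.0 `tools/call` request whose `params` contain the tool `name` and its `arguments`. The sketch below builds such a request. The tool name `search_videos` and the argument names `index_id` and `query` are hypothetical. The real names and argument schema come from the server's `tools/list` response.

```python
import json
from itertools import count

# Monotonically increasing JSON-RPC request IDs.
_ids = count(1)


def build_tool_call(name: str, arguments: dict) -> dict:
    # JSON-RPC 2.0 request an MCP client sends to invoke a server tool.
    return {
        "jsonrpc": "2.0",
        "id": next(_ids),
        "method": "tools/call",
        "params": {"name": name, "arguments": arguments},
    }


# Hypothetical tool and argument names.
request = build_tool_call(
    "search_videos",
    {"index_id": "<YOUR_INDEX_ID>", "query": "fourth-quarter three-pointers"},
)
print(json.dumps(request, indent=2))
```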

Generate video summaries

- “Summarize this video in 3 sentences”

- “Generate highlights from this presentation”

- “Create a brief overview of this training video”

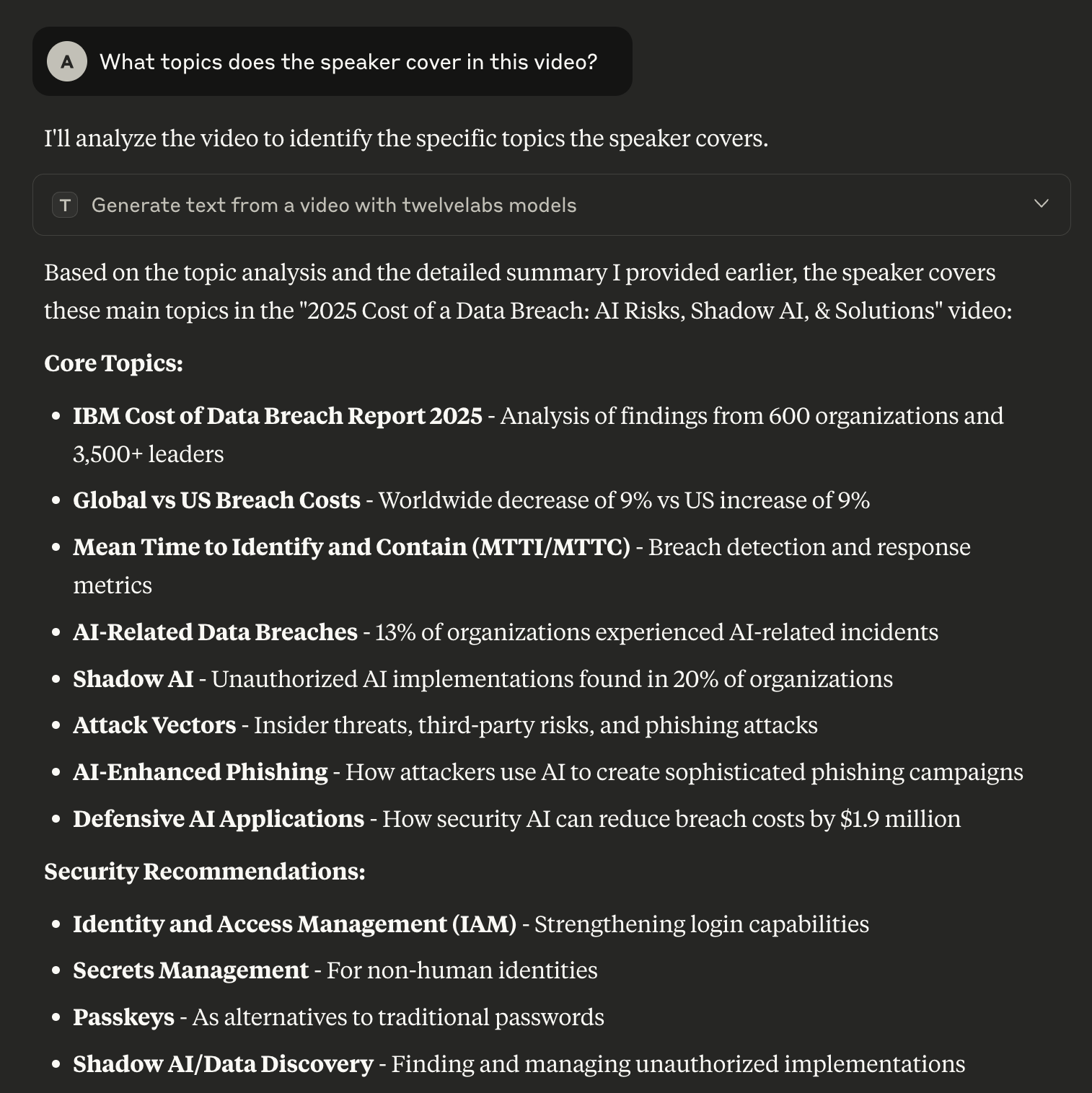
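A tool's answer, such as a generated summary, comes back as the `result` of the `tools/call` response. That result holds a `content` array of items like `{"type": "text", "text": ...}` and may set `isError` when the tool fails. The sketch below extracts the text and surfaces tool errors. The sample response and its summary text are hypothetical and are included only to show the shape.

```python
import json


def tool_result_text(response_text: str) -> str:
    """Join the text items of an MCP tools/call result, or raise on a tool error."""
    result = json.loads(response_text)["result"]
    texts = [item["text"] for item in result.get("content", []) if item.get("type") == "text"]
    if result.get("isError"):
        raise RuntimeError("tool reported an error: " + " ".join(texts))
    return "\n".join(texts)


# Hypothetical response to "Summarize this video in 3 sentences".
sample_result = json.dumps({
    "jsonrpc": "2.0",
    "id": 7,
    "result": {
        "content": [{
            "type": "text",
            "text": "The host introduces the product. A live demo follows. "
                    "The video ends with pricing details.",
        }],
        "isError": False,
    },
})
print(tool_result_text(sample_result))
```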

Ask questions about videos

- “What topics does the speaker cover in this video?”

- “When does the demo section begin?”

- “What are the key takeaways from this interview?”

Tools

The server provides the following MCP tools:

Next steps

After you complete the setup, you can build custom workflows that combine video analysis with other tools and integrate the capabilities of the TwelveLabs Video Understanding Platform into your existing AI applications.