Search with text, image, and composed queries

This guide explains how to use text queries, image queries, and composed text and image queries to search for specific content within videos. It describes what each query type can do and gives best practices for achieving accurate search results.

Text queries

Text queries allow you to search for video segments using natural language descriptions. The platform interprets your query to find matching content based on visual elements, actions, sounds, and on-screen text.

Note the following about using text queries:

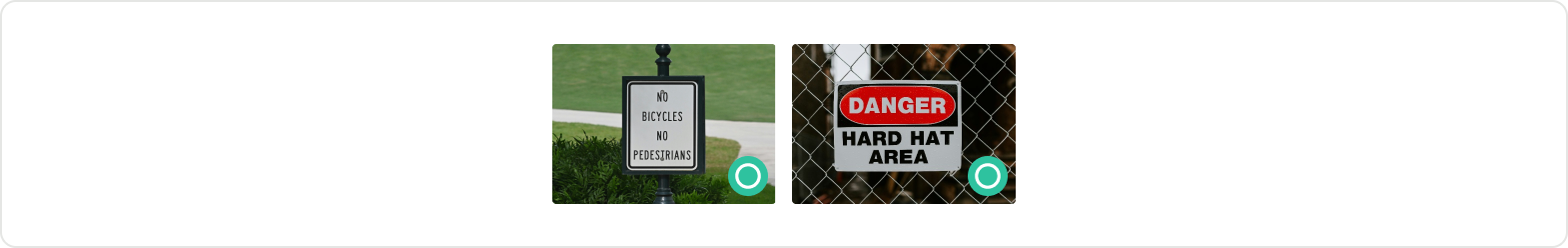

- The platform supports natural-language search. The following examples are valid queries: “birds flying near a castle,” “sun shining on the water,” “chickens on the road,” “an officer holding a child’s hand,” and “crowd cheering in the stadium.”

- To search for specific text shown in videos, use queries that target on-screen text rather than objects or concepts. Note that the platform may return both textual and visual matches. For example, searching for the word “smartphone” might return both segments where “smartphone” appears as on-screen text and segments where smartphones are visible as objects.

- To detect logos, specify the text within the logo. If the logo doesn’t contain text, you can search using image queries.
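As a rough illustration of the points above, the following sketch assembles request bodies for a few text queries. The function name `build_text_search` and the field names `index_id` and `query_text` are assumptions made for this example, not the platform's documented schema.

```python
def build_text_search(index_id: str, query_text: str) -> dict:
    """Return a hypothetical request body for a text query."""
    text = query_text.strip()
    if not text:
        raise ValueError("query_text must not be empty")
    # Field names are illustrative assumptions, not the platform's schema.
    return {"index_id": index_id, "query_text": text}


examples = [
    "birds flying near a castle",  # natural-language scene description
    "smartphone",                  # may match on-screen text and visible objects
]
requests = [build_text_search("my-index-id", query) for query in examples]
```

Each entry in `requests` is a plain dictionary that you could adapt to whichever client you use to call the platform.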

Image queries

Image queries enable you to search for video segments using images. The platform performs semantic searches to find content contextually similar to your query image.

Note the following about using images as queries:

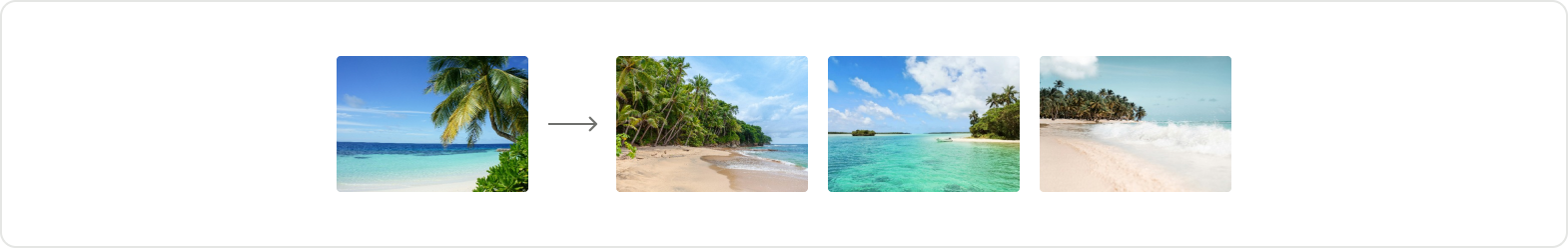

- The platform supports only semantic searches. When performing a semantic search, the platform determines the meaning of the image you provide and finds the video segments containing contextually similar elements. For example, if you use an image of a tree as the query, the search results might contain videos featuring different trees, focusing on the overall characteristics rather than specific details.

- The objects you want to search for must be sufficiently large and detailed. For example, using an image of a car in a parking lot is more likely to yield precise results than an image of a small branded pen on a table in a large room.

- Image queries do not support searching for specific words or phrases spoken or displayed as text within videos. For example, if you provide an image of a cat as your query and want to find all the video segments where the word “cat” is mentioned or appears on the screen, image queries cannot retrieve those results. Use text queries instead.

Composed text and image queries

Composed queries allow you to combine text descriptions with images for more precise search results. This approach enables you to specify visual references through images while adding textual criteria to refine your search.

Note the following about using composed queries:

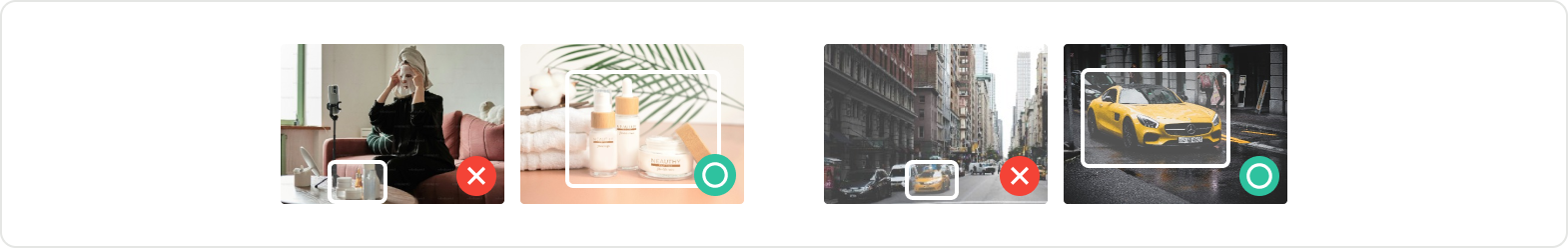

- Composed queries work by combining the semantic understanding of your image with the specificity of your text. For example, if you provide an image of a car model and add the text “red color,” the platform returns only segments showing red instances of that specific vehicle.

- Use composed queries when you need to narrow image-based searches with specific attributes. Common use cases include searching for objects with specific colors, identifying scenes with particular actions or contexts, and locating products with specific features or configurations.

- The text component of the query refines the image results. The platform interprets both inputs together to find segments that match the combined criteria. For instance, providing an image of a building with the text “at night” returns segments showing that building during nighttime, not all buildings at night or that building at any time.

- Composed queries are available only when using Marengo 3.0. Ensure your index is created with this model to use this feature.
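The model requirement can be checked before a request is sent. The sketch below is one possible way to do this. The helper `build_search`, the field names `query_text` and `query_image_url`, and the model identifier string `"marengo3.0"` are all assumptions made for this example rather than documented names.

```python
from typing import Optional

COMPOSED_MODEL = "marengo3.0"  # assumed identifier string for Marengo 3.0


def build_search(index_model: str,
                 query_text: Optional[str] = None,
                 image_url: Optional[str] = None) -> dict:
    """Return a hypothetical request body for a text, image, or composed query."""
    if not query_text and not image_url:
        raise ValueError("provide query_text, image_url, or both")
    # A query with both inputs is a composed query, which needs Marengo 3.0.
    if query_text and image_url and index_model != COMPOSED_MODEL:
        raise ValueError("composed queries require an index created with Marengo 3.0")
    body = {}
    if query_text:
        body["query_text"] = query_text
    if image_url:
        body["query_image_url"] = image_url  # illustrative field name
    return body


# Composed query: an image of a car model refined by the text "red color".
composed = build_search("marengo3.0", "red color", "https://example.com/car.jpg")
```

Validating on the client side like this surfaces the model mismatch early, instead of relying on an error from the platform.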

Choose between text, image, and composed queries

Use text queries when:

- Searching for spoken words or phrases.

- Finding on-screen text, such as signs or captions.

- Describing scenes, actions, or concepts in natural language.

- Detecting logos with text.

Use image queries when:

- Finding visual content similar to an image you provide.

- Searching for objects, scenes, or visual patterns.

- Detecting logos without text.

Use composed text and image queries when:

- Searching for specific variations of objects shown in an image (such as color, size, or condition).

- Finding scenes that match both a visual reference and additional context.

- Narrowing image-based searches with specific attributes or characteristics.

- Specifying additional criteria that aren’t visible in your visual reference alone.
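The guidance above can be condensed into a small decision helper. This is a simplified sketch: the function `choose_query_type` and its parameters are hypothetical, and real cases may need more nuance than three flags can capture.

```python
def choose_query_type(has_image: bool,
                      has_text_criteria: bool,
                      targets_words: bool = False) -> str:
    """Suggest a query type based on the guidance in this section.

    targets_words: True when you are looking for spoken words, on-screen
    text, or a logo that contains text.
    """
    if targets_words:
        # Image queries cannot retrieve spoken or displayed words.
        return "text"
    if has_image and has_text_criteria:
        # A visual reference plus extra attributes, such as color or time of day.
        return "composed"
    if has_image:
        # Visual similarity only, including logos without text.
        return "image"
    return "text"


suggestion = choose_query_type(has_image=True, has_text_criteria=True)
```

Here `suggestion` is `"composed"`, matching the case where an image is narrowed by additional textual criteria.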